Benchmarking Producer-Consumer Implementations

Jul 26, 2024

7 mins read

In this post we are concerned with Single Producer Multiple Consumers (SPMC) solutions for exchanging data in a multithreaded application. We compare performance of several locking and lock-free implementations under realistic conditions common for audio and other streaming applications.

The Problem

We consider a system in which sample data are captured from one or more sensors at a regular interval. A good example is a multichannel audio card. A device driver usually invokes a user callback and passes the input samples as a parameter. The callback must finish its execution before the next block of samples becomes available, at which point it is invoked again.

As with most multithreaded applications the main performance bottleneck is caused by the need to synchronize the producer and consumer threads to avoid race conditions. Failure to do so in a C++ application results in Undefined Behaviour. It is important to understand that Undefined Behaviour (UB) means that the result of the whole program becomes undefined and not just the operation that caused it. Upon encountering Undefined Behaviour a valid C++ program is free to take any actions including terminating immediately. Due to the overhead of identifying race conditions and UB most compilers assume that UB never happens, and thus a defective application will compile and run, and the error might never be discovered.

Possible Solutions

All synchronization mechanisms fall into two broad categories: locking and lock-free. The former rely on mutexes or semaphores to avoid race conditions where the same memory address is read and written simultaneously by two threads. Reading the same memory from multiple threads does not introduce a race condition and is in fact encouraged to increase performance.

Lock-free synchronization uses atomic counters updated by the producer (writer) thread before it starts and after it finishes writing. A consumer thread checks the value of the counter before and after the read operation.

Locking with mutexes

Mutexes (MUTual EXclusions) guarantee that write and read operations do not happen at the same time. The C++ standard library has std::mutex since C++11. As the name suggests, it provides exclusive access to the locked section of the code to only one thread, while other threads trying to acquire this mutex are blocked. std::shared_mutex introduced in C++17 provides a finer grain synchronization by adding lock_shared and unlock_shared functionality. This way several reader threads (consumers) can access the same resource simultaneously (shared lock), while the writer thread is blocked until it is able to acquire the exclusive mutex.

Lock-free solutions

The idea behind Sequential Lock (SeqLock) solution is to use an atomic integer counter as a lock-free and wait-free synchronization mechanism. The producer increments the counter before starting and after finishing the write operation. Thus, an even counter indicates the data has been written and could be read. Since there is only one producer in this architecture writing is lock-free. In a wait-free implementation the consumer checks the counter before and after performing the read operation. If both counters are even and equal the read succeeds, otherwise the attempt is repeated.

Unless the data being exchanged is atomic itself, this solution suffers from race conditions. Strictly speaking, each unsuccessful read causes undefined behaviour, and technically the program is incorrect. Subsequent retries mitigate the problem of “torn” reads and the SeqLock solution works in practice.

Another issue with SeqLock is caused by compiler optimizations. A compiler can change the order in which memory reads and writes are performed. In the compiled code the second atomic counter increment in the producer algorithm can occur before the data is written, or both increments can happen after it. On some architectures like x86 this can be prevented by calling std::atomic_signal_fence(std::memory_order_acq_rel). This approach does not work on ARM and other means of preventing compiler optimization have to be employed.

Message Queues

Message queue libraries like ZeroMQ provide in-process communication between threads. These solutions introduce a substantial overhead and are expected to be much less performant. The main appeal of message queues is their flexibility. The same system can be configured to run as a single multithreaded/multiprocess application using in-process/interprocess protocols, a distributed system using networking protocols, or a hybrid application.

HDF5 Single Writer Multiple Readers

If one of the consumers in your system is a recorder that saves data to a file you might consider HDF5 with its Single-Writer-Multiple-Readers option enabled. A lot of work has been done my the HDF5 Foundation recently to improve the performance, and it would be interesting to see how it compares with ZeroMQ and other solutions. This however is a subject for future posts.

Performance metrics

The performance of SPMC implementations is usually measured in a number of read/writes per second or equivalently, in duration of a single operation. A minimum data size of 64 bit is commonly used to benchmark the performance of a synchronization mechanism itself and decouple it from memory copies.

In this benchmark we adopt a more pragmatic approach where the performance of SPMC is measured using realistic data blocks starting from 64 double precision samples. The number of consumers is also limited to reflect the number of applications or services typical to complex processing systems. Performance is expressed as time in nanoseconds required to perform a single read and write operation.

Implementation

Benchmark source code is available on GitHub. The SeqLock solution is borrowed almost verbatim from Erik Rigtorp’s repository.

The tests were run on a Dell Latitude laptop with Intel i5-7200U CPU and 8 GB of memory. Thread sanitizer in gcc-14 identified race conditions in the SeqLock code confirming the concerns about correctness of the implementation in terms of strict compliance with the language standard.

Results

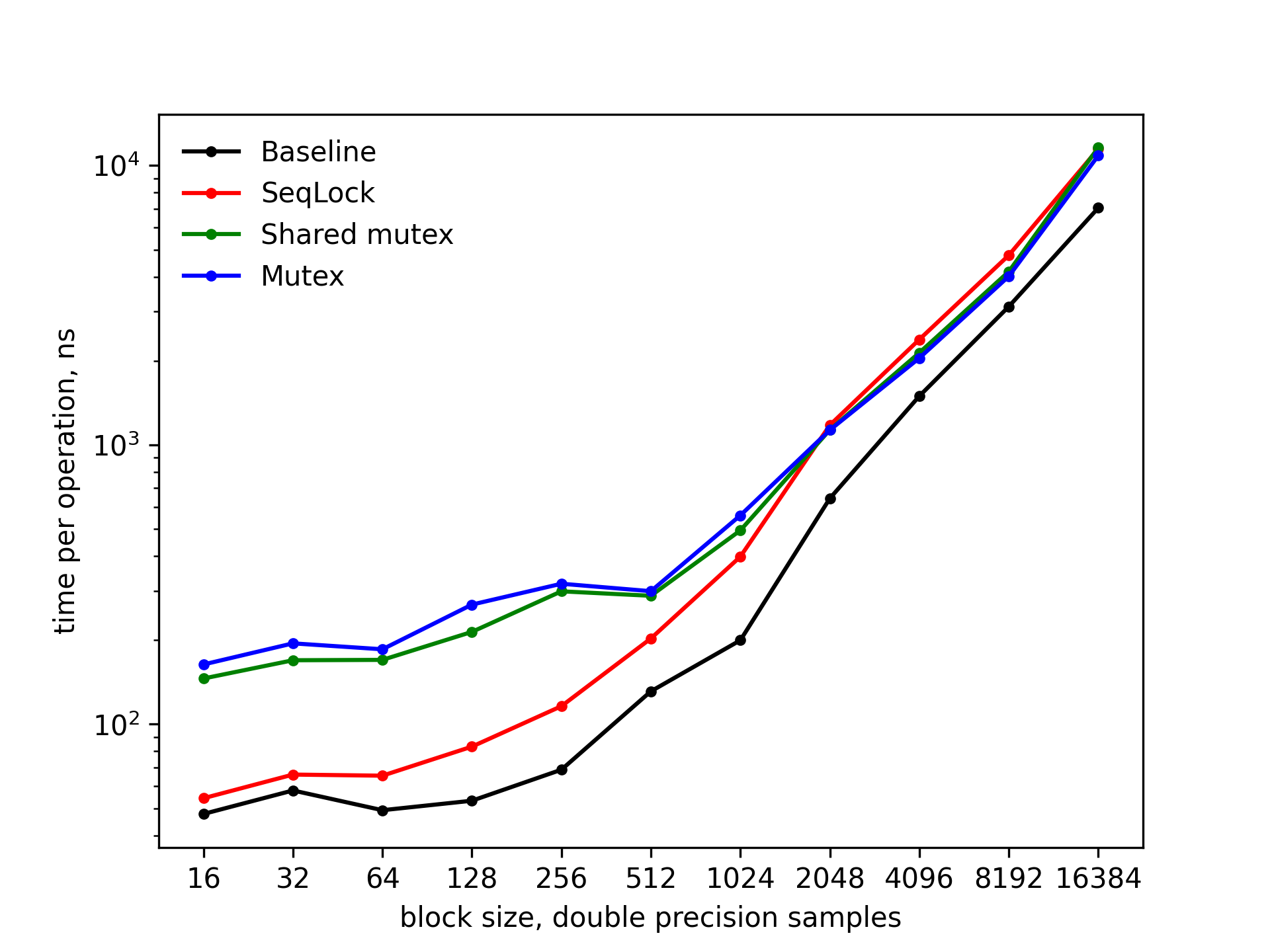

The figure below shows the time per one write operation as a function of block size for the three implementations. The baseline curve represents the time of memcpy operations only. The Y-axis is in logarithmic scale. The number of readers in this example was set to three and the ring buffer size was fixed at 10 blocks.

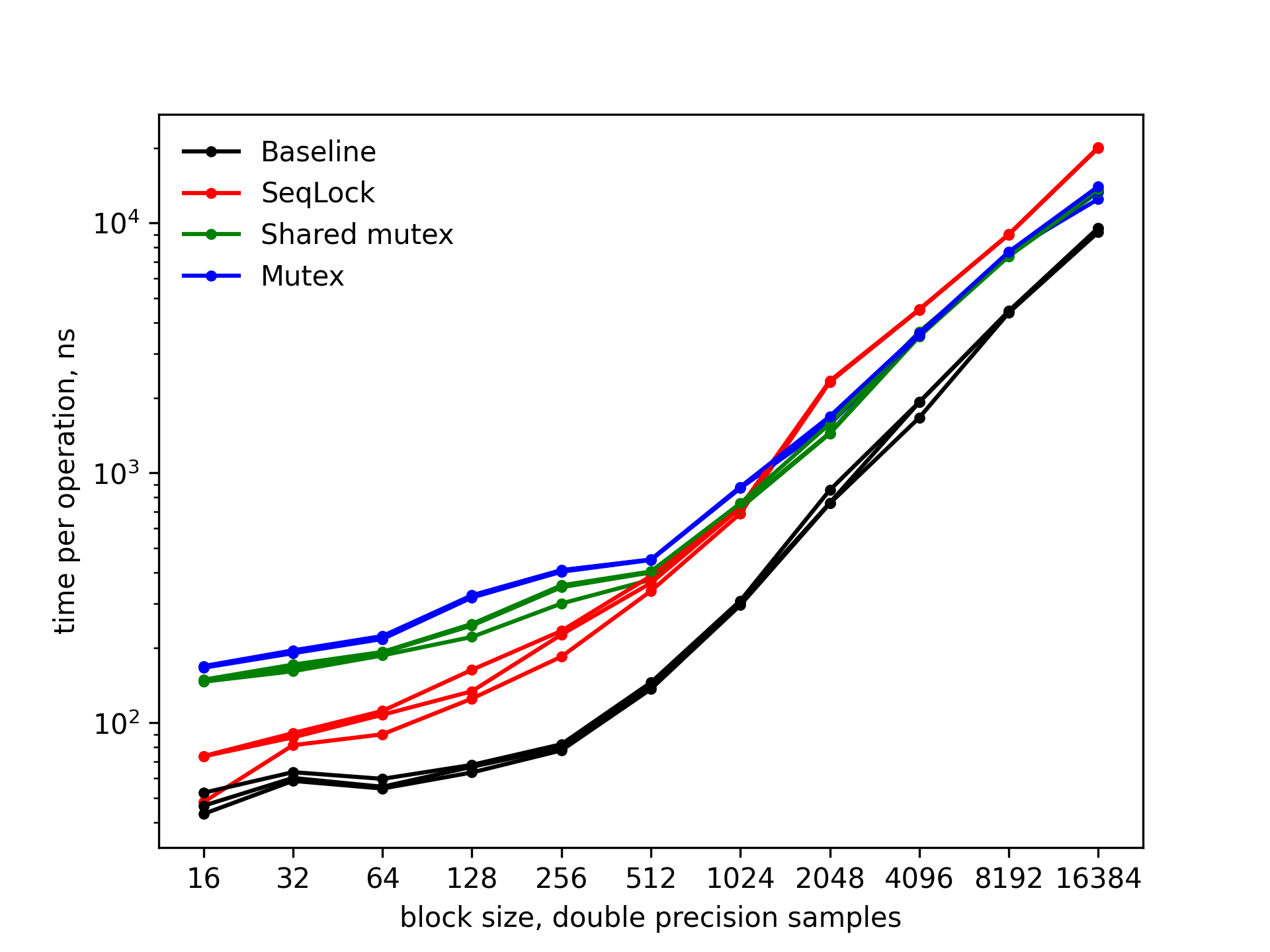

The next figure shows similar results for each of the three reader threads.

The first thing to notice is that the overhead introduced by atomic counter operations in the SeqLock implementation is small but still noticeable. Otherwise, the results were as expected. Read and write benchmarks demonstrate the same behaviour as the data size increases.

For block sizes below 512 samples the SeqLock demonstrates the best performance. As the time required to copy data increases the advantage of the lock-free solution diminishes until it becomes slower than the mutex solutions. Repeated reads in the wait-free consumers seem to degrade the performance of the writer.

The two locking solutions closely follow each other, with the shared mutex showing slightly better performance. Both implementations demonstrate improved performance as the block size grows.

ZeroMQ results were somewhat disappointing, as they were at least an order of magnitude slower than the mutex solution, rendering it impractical for large throughput in-process data exchange.

Conclusion

A few observations follow from these simple benchmark tests. Firstly, even a fast lock-free and wait-free synchronization algorithm like SeqLock introduces a measurable overhead. Second, the mutex implementations in the standard C++ library are quite good and should be your preferred solution unless you need to squeeze the last bits of performance out of your code.

And finally, software engineering involves much more than just writing the fastest code. If you are working in a regulated environment like medical, aviation or defence industry, you need to consider standard compliance and code correctness. These factors and cross-platform compatibility could dictate the choice of algorithms and their implementations.